Quantum World, Optics, Relativity, Telecoms, Food, Nature & Cricket

Santanu Ganguly

Wednesday 7 January 2015

Monday 8 December 2014

Firewalling with (Cisco) Snort & Guardian Active Response, Best Practices

Why do we need Snort?

· Many forms of attack can go completely undetected by casual observation

· Many modern attacks, such as DDoS, are impossible to prevent or contain using static firewall rules

· Snort is an answer to the demand for a cheap, automated solution

The first Snort post dealt with building Snort on Ubuntu 14.04 from source code with Barnyard, SnortReport and ACID. The next bits cover Snort sensor set-up. The Snort log for a sensor running on eth1 is processed via snort_stat.pl, e-mailed to "cert@our.org", compressed and stored. Snort is then restarted.

Automated Attack Response

Network intrusion detection systems can mitigate attacks to some extent by applying network access controls, such as firewall rules, or by directly terminating offending connections. For a review of Snort attack response rules, please see http://blog.snort.org/2012/03/rule-category-reorganization.html

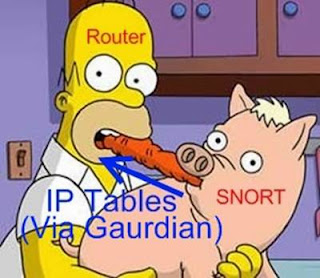

One of the flexible ways to use Snort for automatic attack response is with Guardian Active Response for Snort. Guardian is a security program which works in conjunction with Snort to automatically update firewall rules based on alerts generated by Snort. The updated firewall rules block all incoming data from the IP address of the attacking machine (the machine which caused Snort to generate an alert). There is also logic in place which prevents blocking important machines, such as DNS servers, gateways, other hosts with known IP addresses and whatever else is needed.
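The block-unless-whitelisted idea can be sketched in a few lines of Python. This is not Guardian's own code (Guardian is written in Perl). It assumes alert lines in Snort's "fast" alert layout, and the names `WHITELIST`, `source_ip` and `block_command`, as well as every address shown, are made up for illustration. The iptables rule is only built as a list of arguments, never executed.

```python
import ipaddress
import re

# Hypothetical whitelist: hosts and networks that must never be blocked
# (DNS servers, gateways, management hosts and so on).
WHITELIST = [
    ipaddress.ip_network("192.168.1.1/32"),   # assumed gateway
    ipaddress.ip_network("192.168.1.53/32"),  # assumed DNS server
    ipaddress.ip_network("10.10.0.0/24"),     # assumed management network
]

# Matches the "{PROTO} src[:port] -> dst[:port]" tail of a fast-format alert.
ALERT_RE = re.compile(
    r"\{(?P<proto>\w+)\}\s+(?P<src>\d+\.\d+\.\d+\.\d+)(?::\d+)?\s+->\s+"
    r"(?P<dst>\d+\.\d+\.\d+\.\d+)(?::\d+)?"
)


def source_ip(alert_line):
    """Return the source (attacking) IP of an alert line, or None."""
    match = ALERT_RE.search(alert_line)
    return match.group("src") if match else None


def is_whitelisted(ip):
    """True if the address falls inside any whitelisted network."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in WHITELIST)


def block_command(alert_line):
    """Build (not run) an iptables rule that drops traffic from the source."""
    ip = source_ip(alert_line)
    if ip is None or is_whitelisted(ip):
        return None
    return ["iptables", "-I", "INPUT", "-s", ip, "-j", "DROP"]


if __name__ == "__main__":
    line = ("01/07-10:15:02.123456  [**] [1:1000001:1] Example alert [**] "
            "[Priority: 2] {TCP} 203.0.113.7:4444 -> 192.168.1.10:80")
    print(block_command(line))
```

Checking the whitelist before anything is handed to the firewall keeps a false positive against a gateway or DNS server from turning into a self-inflicted outage.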

Here is a recommended link: http://www.symantec.com/connect/articles/understanding-ids-active-response-mechanisms . It explains, in a way I found very interesting, why one should use this software with great caution. :-)

Snort –

· Network intrusion detection and prevention system (IDS/IPS)

· Analyzes incoming traffic for signs of attack

o Protocol analysis

o Heuristic content matching

o Rule based

· Report generation

Guardian Active Response (GAR) – Active firewall modification scripts for several firewall programs

· Designed for Snort

· Whitelist for preventing unwanted blocking

· Written in Perl

· Supports watching multiple IPs

Why use Guardian? – Uses snort logs to dynamically block threats

SNORT Network Configuration Overview

Guardian Setup & Integration

· Installed on a dedicated machine: The Acronym Friendly Vast Lab Intrusion Detection and Prevention System (AFVLIDPS)

· Passive connection to hub sniffs incoming traffic without incurring additional delay

· There is a delay, however, between the start of the attack and the Guardian response

GAR Rules

· Avoid service interruptions due to false positives

· Creating rules requires nontrivial amounts of data and analysis

· Quality of Service

§ Restrict to times of day

§ Restrict based on attack frequency

§ Staged restrictions
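The "attack frequency" and "staged restrictions" ideas above can be sketched as a sliding-window counter that escalates the block duration. This is only an illustration, not how Guardian itself is implemented; the window length, the thresholds and the class name `StagedBlocker` are assumptions chosen for the example.

```python
from collections import defaultdict, deque

# Assumed policy: (alerts seen within WINDOW seconds, block duration in seconds).
WINDOW = 60
STAGES = [(3, 300), (10, 3600), (30, 86400)]


class StagedBlocker:
    """Escalate the block duration as one source triggers more alerts."""

    def __init__(self, window=WINDOW, stages=STAGES):
        self.window = window
        self.stages = sorted(stages)
        self.alerts = defaultdict(deque)  # source IP -> recent alert timestamps

    def record(self, ip, now):
        """Record one alert; return the block duration in seconds (0 = none)."""
        times = self.alerts[ip]
        times.append(now)
        # Forget alerts that have fallen out of the sliding window.
        while times and now - times[0] > self.window:
            times.popleft()
        duration = 0
        for threshold, seconds in self.stages:
            if len(times) >= threshold:
                duration = seconds
        return duration


if __name__ == "__main__":
    blocker = StagedBlocker()
    for second in range(4):
        print(second, blocker.record("203.0.113.7", now=second))
```

A single stray alert never blocks anyone under this policy, which is one way to limit the damage from false positives.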

GAR Performance

· Guardian can read the logs quickly

· MySQL logs are used to view reports and do not affect speed of system

· QoS - Quality of Service

§ Block all potentially harmful traffic?

§ Limit harmful traffic?

§ Leak a little traffic from harmful sources?

Data / Results

The whitelist of addresses which should never be blocked by the IDS is crucial for the automated response system. Even if the IDS invokes the response command only after seeing the attack in a full TCP stream (thus preventing an attacker from causing a DoS by getting access to mission-critical hosts blocked), many IDS signatures have false positives that will disrupt non-malicious connections. It is desirable to enable response only on reliable signatures with no known false positives, and additionally to maintain a well-designed whitelist.

The tool supports Linux iptables, ipchains, ipfwadm, ipfw, and also commercial CheckPoint Firewall-1 and Cisco PIX firewalls.

Distributed IDS Setup

Networks with higher traffic load might justify deploying several dedicated sensor machines reporting to a single alarm database and console. To build a distributed IDS, one needs to install the components described above into different machines. The set-up is similar to the single host set-up described here. The difference is that now we need two (or more) base machines with Ubuntu.

Sensor Installation

Install base system, as described here. Set up Snort IDS with MySQL support:

# apt-get install snort-mysql

Please accept all default values during installation. Now Snort is set to log to a database on another host. The appropriate lines in snort.conf on every sensor machine are:

# output plugins
output database: log, mysql, user=snort password=<the secret snort password here> dbname=snort_log host=<central-log-host-running-sql-name>

Every machine can have more than one Snort instance for the case of a distributed VLAN or a combination of LANs and VLANs.

Installation of Central Station

The Base system installation should be done as described above. MySQL server should be set up via “apt-get install mysql-server”. During the installation, network connections should be allowed to the SQL server.

Issues may start when granting permissions to access the database tables. One needs to repeat the "grant" and "set password" commands for each host that needs to connect to the central database. For example:

mysql> grant CREATE, INSERT, SELECT, DELETE, UPDATE on snort_log.* to snort@<sensor1-name.our.org>;
mysql> grant CREATE, INSERT, SELECT, DELETE, UPDATE on snort_log.* to snort@<sensor2-name.our.org>;
mysql> grant CREATE, INSERT, SELECT, DELETE, UPDATE on snort_log.* to snort@<sensor3-name.our.org>;
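With more than a handful of sensors, typing one grant per host gets tedious. A small Python helper can generate the statements for pasting into the mysql client. The sensor hostnames below are hypothetical, and the host part is quoted here on the assumption that names containing dots or hyphens need quoting in MySQL account names.

```python
PRIVILEGES = ["CREATE", "INSERT", "SELECT", "DELETE", "UPDATE"]


def grant_statements(sensors, user="snort", database="snort_log"):
    """Return one GRANT statement per sensor host, as plain strings."""
    privs = ", ".join(PRIVILEGES)
    return [
        f"grant {privs} on {database}.* to '{user}'@'{host}';"
        for host in sensors
    ]


if __name__ == "__main__":
    # Hypothetical sensor hostnames in the our.org domain.
    for statement in grant_statements(["sensor1.our.org", "sensor2.our.org"]):
        print(statement)
```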

The multi-machine setup needs to address the issue of maintaining signature sets. In the likely case that the machines are deployed in different environments with different security requirements, their rulesets will differ. An internal sensor will need a different rule set from the one running outside the border router or in the DMZ.

Manual rule updates become an administrative burden if more than one sensor is deployed. One simple solution is to deploy the signatures on a central server and then retrieve them via FTP. If the signature sets are known in advance, i.e. the same signatures need to be disabled on each update, then Oinkmaster can be used to distribute updates from a central server and handle the local ruleset customization on each sensor via the Oinkmaster interface.

Each sensor may also have its own unique configuration, which is tuned using the "include" directive in the configuration file.

The security of the sensor-console communication also needs to be addressed. If deemed necessary, the SQL communication between the sensor and the database can be tunneled over secure shell (SSH).

Large Distributed IDS

In this case the central system is further separated, and the Web server and SQL server are installed on separate machines. This is a variation of the previous set-up. One needs to install sensors, then install the database on one machine, and deploy the Web server and ACID onto another machine. When setting up the IDS console, one should configure the source database as located at another machine. This will leave more CPU cycles for the database process.

Some Best Practices

Whether we are deploying an intrusion detection system on a large network with many sensors or a small network with a single sensor, we should deploy the device in as secure and logical a manner as possible. This article covers some techniques that value-added resellers (VARs) and security consultants use to securely deploy a network IDS on a customer's network. It focuses on Snort deployments, simply because Snort is easily available, widely used and open source, even after Cisco bought Sourcefire :-) ... however, the same principles can be applied to other IDSes and applications.

VLAN placement

First, determine what the customer wants monitored. Traffic can be monitored either outside the firewall or inside it. I personally prefer the inside but like to keep a sensor on the outside in case I need to look at that traffic. tcpdump or a sniffer like Wireshark (formerly Ethereal, now sponsored by Riverbed) can be used to monitor the interfaces. This makes it easier to diagnose network problems and detect denial-of-service attacks.

Management network

The customer's IDS information should not be available to just anyone. To help protect this information, a management VLAN should be set up for the specific purpose of non-sniffing interfaces to sit on. If this is not possible or practical, then usage of iptables and TCP wrappers should be considered to restrict who can view what and from where.

Secure communication

Only HTTPS should be used to view the IDS management and reporting interface. All communication between sensors and management consoles should be tunneled through SSH or Stunnel.

Secure access to management and reporting interface

The reporting interface should never be left unprotected. Even if the built-in access control of the application itself is being used, the interface should always be protected with Web server or file level permissions, depending on the Web server the customer is using. If it is on the main LAN, then access to it can also be limited via iptables or TCP Wrappers.

Securing the management interface

All applications and utilities that are not needed should be removed, all ports that are not being used should be closed, and communication should be allowed to only what the sensor or manager needs to talk to. This is a basic policy in all matters of systems administration, and it is especially important when considering the sensitive data IDS sensors might pick up.

Reporting and checking

The customer's IDS should be inspected regularly, perhaps every hour or two, and a log kept of the last time it was checked. All alerts from that time forward should be viewed so that nothing is missed. BASE can also be used for monitoring Snort. It is based on the ACID code that most people have used and are familiar with, but it is actively developed and works much better than its predecessor.

Tuning and updates

While not truly a secure deployment concern, tuning is one of the most important steps in deploying an IDS. If the customer becomes overwhelmed with irrelevant alerts, they will be more apt to ignore alerts as time goes by. As far as updates are concerned, both the rules and the engine should be kept up to date. The use of Oinkmaster is recommended for Snort deployments. It will update the rules on a regular basis, and the administrator will be notified via email of any changes. The use of SourceFire VRT rules is recommended for Snort installs due to the research and development that goes into them. They are written against the vulnerability rather than a particular exploit and are thoroughly tested by the SourceFire VRT team. One can register to receive them on Snort.org.

Syncing time

An NTP server should be set up on the management system, and the sensors should be configured to synchronize with it. Consistent timestamps are key to determining what is happening on the network and when.

References

· “Design Of an Autonomous Anti DDos network” by Angel Cearns

· http://www.chaotic.org/guardian/

Monday 10 November 2014

Cisco ACI Fabric & Data Center Design Basics-Part I

Starting point of designing a Data Center (DC)?

Main query: Is there going to be virtualization or not? In a modern-day DC, virtualization is not only a must but “ON” almost by default. If the DC is going to be virtualized, is there a requirement for a Layer 2 fabric with an end-to-end protocol (such as FabricPath or VXLAN), or is the requirement more for a Layer 3 fabric with IP?

Applications? -

· Fabric based: Virtualized DC, for example, with FabricPath or VXLAN

· IP Based:

§ Big Data

§ Financial

§ Massive Scale

· Non-IP based: High Performance Computing (HPC) such as Ethernet, RDMA over Ethernet etc.

Hence, the main thing to address is the applications. What applications are going to run in the DC? The most important thing in a virtualized DC is the set of applications running on virtual machines. From a high-level point of view, there are two main groups of applications: IP based and non-IP based.

Examples of IP Based DCs are as follows:

· Big Data: Big Data has a distributed workload with a huge amount of east-west traffic to process all the analytics that the Big Data process requires.

· Financial or Ultra-Low-Latency (ULL) applications: Typically, in a financial environment, latency between two points is critical, so minimal switch latency is required. It is necessary to reduce the number of devices in the network because each device introduces a little bit of latency. Based on the ULL requirements, this can be a 2-tier, 3-tier or even a collapsed single-tier network environment with 2 TOR switches, one of them for redundancy.

· Massive Scale Applications: Typically found in very large DCs with numerous different clusters.

Non-IP based applications: High Performance Computing (HPC), for example over Ethernet or RDMA over Ethernet, i.e., a Layer 2 fabric.

Storage?: The next query in line, after applications, is what kind of storage is being used. Is it going to be Fibre Channel (FC) or IP in the DC? Or will it be FCoE or IP? Will the storage be centralized or distributed? The devices and the design of the DC will typically depend on the answers about storage and SAN types.

Physical Constraints: Power, cooling, space (urbanism). Will there be larger modular chassis or smaller devices? Will there be PODs, such as FlexPods, which are typically one logical unit running all the different applications that are critical for an elevated user experience? In a POD environment, the portal that the user connects to connects, in turn, to a DC. One POD is a number of servers, storage and switches. Typical best practice is to start small with a POD.

Application Centric Infrastructure (ACI) Fabric Overview

Cisco Application Centric Infrastructure (ACI) fabrics use the Cisco Nexus 9000 Series Switches as the core of the transport system. The Cisco Nexus 9000 Series was designed from the foundation to meet the rapidly changing requirements of data center networks, while enabling the advanced capabilities of Cisco ACI. At the physical layer, the Cisco ACI fabric consists of a leaf-and-spine design, or Clos network. This design is well suited to the east-west traffic patterns of modern data centers, moving traffic between application tiers or components. Figure 1 shows a typical spine-and-leaf design.

As shown in Figure 1, with this design each leaf connects to each spine, and no connections are created between pairs of leafs or pairs of spines. Leaf switches are used for all connectivity outside the fabric, including servers, service devices, and other networks such as intranets and the Internet.

With this architecture a spine switch provides cross-sectional bandwidth between leaf switches, plus additional redundancy. Bandwidth is determined by the number of spines and number of links to each spine. Redundancy is dictated by the amount of bandwidth lost in the event of a spine failure. In the topology in the figure, a single spine failure would reduce overall bandwidth and paths by 25 percent because of the use of four spines.
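The 25 percent figure follows directly from the spine count, as a short Python sketch shows. The function names are made up for illustration, and the one-link-per-spine and 40 Gbps values in the usage example are assumptions, not a sizing recommendation.

```python
def leaf_uplink_gbps(spines, links_per_spine, link_gbps):
    """Total uplink bandwidth of one leaf when it connects to every spine."""
    return spines * links_per_spine * link_gbps


def loss_fraction_on_spine_failure(spines, failed=1):
    """Fraction of bandwidth (and paths) lost when `failed` spines go down."""
    if not 0 <= failed <= spines:
        raise ValueError("failed must be between 0 and the number of spines")
    return failed / spines


if __name__ == "__main__":
    # Four spines, one assumed 40 Gbps link from each leaf to each spine.
    print(leaf_uplink_gbps(spines=4, links_per_spine=1, link_gbps=40))
    print(loss_fraction_on_spine_failure(spines=4))
```

With four spines a single failure removes one quarter of the capacity; with eight spines the same failure would cost one eighth, which is why adding spines improves both bandwidth and resilience.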

Above this physical layer Cisco ACI uses a controller, the Cisco Application Policy Infrastructure Controller (APIC), to manage the data center network and its policy centrally. Cisco APIC not only provides central management and automation, but also a policy model that maps application requirements directly onto the network as a cohesive system for application delivery. For more information about Cisco APIC, see http://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/unified-fabric/white-paper-c11-730021.html .

This model provides full automation for the deployment and management of applications end to end, including Layer 4 through 7 policy, providing a single policy design, deployment, and monitoring point for applications. For more information about Cisco ACI and Layer 4 through 7 services, see http://www.cisco.com/c/en/us/products/collateral/cloud-systems-management/aci-fabric-controller/white-paper-c11-729998.html .

The Cisco Application Policy Infrastructure Controller (APIC) API enables applications to directly connect with a secure, shared, high-performance resource pool that includes network, compute, and storage capabilities. The following figure provides an overview of the APIC.

The Cisco Application Centric Infrastructure (ACI) fabric includes Cisco Nexus 9000 Series switches with the APIC to run in the leaf/spine ACI fabric mode. These switches form a “fat-tree” network by connecting each leaf node to each spine node; all other devices connect to the leaf nodes. The APIC manages the ACI fabric. The recommended minimum configuration for the APIC is a cluster of three replicated hosts.

The APIC manages the scalable ACI multitenant fabric. The APIC provides a unified point of automation and management, policy programming, application deployment, and health monitoring for the fabric. The APIC, which is implemented as a replicated synchronized clustered controller, optimizes performance, supports any application anywhere, and provides unified operation of the physical and virtual infrastructure. The APIC enables network administrators to easily define the optimal network for applications. Data center operators can clearly see how applications consume network resources, easily isolate and troubleshoot application and infrastructure problems, and monitor and profile resource usage patterns.

APIC fabric management functions do not operate in the data path of the fabric. The following figure shows an overview of the leaf/spine ACI fabric.

The ACI fabric provides consistent low-latency forwarding across high-bandwidth links (40 Gbps, with a 100-Gbps future capability). Traffic with the source and destination on the same leaf switch is handled locally, and all other traffic travels from the ingress leaf to the egress leaf through a spine switch. Although this architecture appears as two hops from a physical perspective, it is actually a single Layer 3 hop because the fabric operates as a single Layer 3 switch.

The ACI fabric object-oriented operating system (OS) runs on each Cisco Nexus 9000 Series node. It enables programming of objects for each configurable element of the system.

The ACI fabric OS renders policies from the APIC into a concrete model that runs in the physical infrastructure. The concrete model is analogous to compiled software; it is the form of the model that the switch operating system can execute. The figure below shows the relationship of the logical model to the concrete model and the switch OS.

All the switch nodes contain a complete copy of the concrete model. When an administrator creates a policy in the APIC that represents a configuration, the APIC updates the logical model. The APIC then performs the intermediate step of creating a fully elaborated policy that it pushes into all the switch nodes where the concrete model is updated.

Note: The Cisco Nexus 9000 Series switches can only execute the concrete model. Each switch has a copy of the concrete model. If the APIC goes off line, the fabric keeps functioning but modifications to the fabric policies are not possible.

The APIC is responsible for fabric activation, switch firmware management, network policy configuration, and instantiation. While the APIC acts as the centralized policy and network management engine for the fabric, it is completely removed from the data path, including the forwarding topology. Therefore, the fabric can still forward traffic even when communication with the APIC is lost.

The Cisco Nexus 9000 Series switches offer modular and fixed 1-, 10-, and 40-Gigabit Ethernet switch configurations that operate in either Cisco NX-OS stand-alone mode for compatibility and consistency with the current Cisco Nexus switches or in ACI mode to take full advantage of the APIC's application policy-driven services and infrastructure automation features.

ACI Fabric Behaviour

The ACI fabric allows customers to automate and orchestrate scalable, high performance network, compute and storage resources for cloud deployments. Key players who define how the ACI fabric behaves include the following:

· IT planners, network engineers, and security engineers

· Developers who access the system via the APIC APIs

· Application and network administrators

The Representational State Transfer (REST) architecture is a key development method that supports cloud computing. The ACI API is REST-based. The World Wide Web represents the largest implementation of a system that conforms to the REST architectural style.

Cloud computing differs from conventional computing in scale and approach. Conventional environments include software and maintenance requirements with their associated skill sets that consume substantial operating expenses. Cloud applications use system designs that are supported by a very large scale infrastructure that is deployed along a rapidly declining cost curve. In this infrastructure type, the system administrator, development teams, and network professionals collaborate to provide a much higher valued contribution. In conventional settings, network access for compute resources and endpoints is managed through virtual LANs (VLANs) or rigid overlays, such as Multiprotocol Label Switching (MPLS), that force traffic through rigidly defined network services such as load balancers and firewalls.

The APIC is designed for programmability and centralized management. By abstracting the network, the ACI fabric enables operators to dynamically provision resources in the network instead of in a static fashion. The result is that the time to deployment (time to market) can be reduced from months or weeks to minutes. Changes to the configuration of virtual or physical switches, adapters, policies, and other hardware and software components can be made in minutes with API calls.

The transformation from conventional practices to cloud computing methods increases the demand for flexible and scalable services from data centers. These changes call for a large pool of highly skilled personnel to enable this transformation. A key feature of the APIC is its REST web API. The APIC REST API accepts and returns HTTP or HTTPS messages that contain JavaScript Object Notation (JSON) or Extensible Markup Language (XML) documents. Today, many web developers use RESTful methods. Adopting web APIs across the network enables enterprises to easily open up and combine services with other internal or external providers. This process transforms the network from a complex mixture of static resources to a dynamic exchange of services on offer.
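As a rough illustration of what such a JSON-over-HTTPS exchange looks like, the Python sketch below builds (but does not send) a login request. The `/api/aaaLogin.json` path and the `aaaUser` payload shape are written from memory of the APIC REST documentation and should be treated as assumptions to verify against the APIC version in use; the hostname and credentials are placeholders.

```python
import json
import urllib.request


def build_login_request(apic_host, username, password):
    """Build an HTTPS POST carrying a JSON login document for the APIC."""
    # Assumed endpoint and payload layout; check the APIC REST API guide.
    url = f"https://{apic_host}/api/aaaLogin.json"
    payload = {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    request = build_login_request("apic.example.net", "admin", "not-a-real-password")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # Sending it would be: urllib.request.urlopen(request)
```

Because the message is an ordinary HTTPS request with a JSON body, any language or tool with an HTTP client can drive the fabric in the same way.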

ACI Fabric

The ACI fabric supports more than 64,000 dedicated tenant networks. A single fabric can support more than one million IPv4/IPv6 endpoints, more than 64,000 tenants, and more than 200,000 10G ports. The ACI fabric enables any service (physical or virtual) anywhere with no need for additional software or hardware gateways to connect between the physical and virtual services and normalizes encapsulations for Virtual Extensible Local Area Network (VXLAN) / VLAN / Network Virtualization using Generic Routing Encapsulation (NVGRE).

The ACI fabric decouples the endpoint identity and associated policy from the underlying forwarding graph. It provides a distributed Layer 3 gateway that ensures optimal Layer 3 and Layer 2 forwarding. The fabric supports standard bridging and routing semantics without standard location constraints (any IP address anywhere), and removes flooding requirements for the IP control plane Address Resolution Protocol (ARP) / Gratuitous Address Resolution Protocol (GARP). All traffic within the fabric is encapsulated within VXLAN.

Decoupled Identity and Location

The ACI fabric decouples the tenant endpoint address, its identifier, from the location of the endpoint that is defined by its locator or VXLAN tunnel endpoint (VTEP) address. The following figure shows decoupled identity and location.

Forwarding within the fabric is between VTEPs. The mapping of the internal tenant MAC or IP address to a location is performed by the VTEP using a distributed mapping database.
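The identifier/locator split can be pictured as a simple lookup table. The Python sketch below is a toy, single-process stand-in for the distributed mapping database; the class name and all addresses are hypothetical.

```python
class MappingDatabase:
    """Toy stand-in for the fabric's distributed mapping database."""

    def __init__(self):
        self._vtep_of = {}  # endpoint identifier (MAC or IP) -> VTEP address

    def learn(self, endpoint, vtep):
        """Record (or update) the VTEP behind which an endpoint sits."""
        self._vtep_of[endpoint] = vtep

    def lookup(self, endpoint):
        """Return the VTEP to tunnel to, or None if the endpoint is unknown."""
        return self._vtep_of.get(endpoint)


if __name__ == "__main__":
    db = MappingDatabase()
    db.learn("10.1.1.20", "192.0.2.101")  # endpoint first seen behind one leaf
    db.learn("10.1.1.20", "192.0.2.104")  # same endpoint moves to another leaf
    print(db.lookup("10.1.1.20"))
```

When the endpoint moves, only its location entry changes; its address, and any policy tied to that identity, stays the same.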

Policy Identification and Enforcement

An application policy is decoupled from forwarding by using a distinct tagging attribute that is also carried in the VXLAN packet. Policy identification is carried in every packet in the ACI fabric, which enables consistent enforcement of the policy in a fully distributed manner. The following figure shows identification.

Fabric and access policies govern the operation of internal fabric and external access interfaces. The system automatically creates default fabric and access policies. Fabric administrators (who have access rights to the entire fabric) can modify the default policies or create new policies according to their requirements. Fabric and access policies can enable various functions or protocols. Selectors in the APIC enable fabric administrators to choose the nodes and interfaces to which they will apply policies.

Encapsulation Normalization

Traffic within the fabric is encapsulated as VXLAN. External VLAN/VXLAN/NVGRE tags are mapped at ingress to an internal VXLAN tag. The following figure shows encapsulation normalization.

Forwarding is not limited to or constrained by the encapsulation type or encapsulation overlay network. External identifiers are localized to the leaf or leaf port, which allows reuse or translation if required. A bridge domain forwarding policy can be defined to provide standard VLAN behavior where required.
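Encapsulation normalization can be pictured as a per-port translation table applied at ingress. The Python sketch below is purely illustrative: the class name, leaf and port names, and all identifier values are invented, and the real fabric does this in switch hardware.

```python
class EncapNormalizer:
    """Map an external tag seen on a leaf port to an internal VXLAN ID."""

    def __init__(self):
        # (leaf, port, encap type, external id) -> internal VXLAN identifier
        self._to_vnid = {}

    def bind(self, leaf, port, encap, external_id, vnid):
        """Associate an external VLAN/VXLAN/NVGRE tag on a port with a VNID."""
        self._to_vnid[(leaf, port, encap, external_id)] = vnid

    def normalize(self, leaf, port, encap, external_id):
        """Return the internal VNID for ingress traffic, or None if unbound."""
        return self._to_vnid.get((leaf, port, encap, external_id))


if __name__ == "__main__":
    table = EncapNormalizer()
    # Two different external encapsulations land in the same internal segment.
    table.bind("leaf1", "eth1/1", "vlan", 10, vnid=16001)
    table.bind("leaf2", "eth1/5", "vxlan", 5000, vnid=16001)
    # VLAN 10 is reused on another leaf for an unrelated segment.
    table.bind("leaf3", "eth1/1", "vlan", 10, vnid=16002)
    print(table.normalize("leaf1", "eth1/1", "vlan", 10))
    print(table.normalize("leaf3", "eth1/1", "vlan", 10))
```

Keying the table on the leaf and port is what makes the external identifier locally significant, so the same VLAN number can be reused elsewhere without conflict.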

Migration from Existing Infrastructure

Most existing networks are not built with a spine-and-leaf design, but may consist of various disparate devices typically configured in a three-tier architecture using a core-layer, aggregation-layer, and access-layer topology as shown in the following figure.

In this design, a single pair of switches is used at the aggregation layer and at the core layer, to provide redundancy for failure events. No more than two switches or routers are used at these tiers because of traditional Spanning Tree Protocol constraints, which cause redundant links to be blocked, therefore negating the benefits of adding more devices.

In this model, the access switches are responsible for server connectivity and are then redundantly connected upstream to the aggregation layer. The aggregation layer provides connectivity between access switches and is typically also the point at which Layer 4 through 7 services are inserted. These services can consist of firewalls, load balancers, etc. Additionally, the aggregation layer is often the Layer 3 or routed boundary, or in some cases the core may provide this boundary.

This Layer 3 boundary design again must accommodate traditional Spanning Tree Protocol constraints and the need for Layer 2 adjacency for some server workloads. In addition, in this design the aggregation tier is the policy boundary for data center traffic. VLANs are typically created with one Layer 3 subnet within them. Broadcast traffic is allowed freely between devices within that subnet or VLAN. Policy (security, quality of service [QoS], services, etc.) is then applied only when traffic is sent to the default gateway to be forwarded between VLANs.

Customer Investment Protection

These topologies have been fairly standard for years. Therefore, customers have a large investment in the networking equipment that is in place. Other than in a completely new, greenfield environment, implementation of an all-new leaf-and-spine design will not be an option. Additionally, major changes to the existing physical or logical topology are typically not welcome because they can induce risk.

Because of these requirements, the design of Cisco ACI fabrics must include both compatibility with existing data center networks and the capability to easily integrate with those networks. The Cisco ACI fabric must be able to be inserted transparently into existing infrastructure while providing the same advantages of policy automation, linear scalability, and application mobility and visibility.

Cisco ACI is designed to provide integration with any existing network in any topology. Layers 2 and 3 can be extended into Cisco ACI, as well as Layer 3 data center overlay technologies such as Virtual Extensible LAN (VXLAN). Beyond these, the topology design and integration points must be carefully considered.

Because Cisco ACI focuses primarily on the design, automation, and enforcement of policy, the aggregation tier is the most logical insertion point for the Cisco ACI fabric. As stated earlier, the aggregation tier is already responsible for policy enforcement and typically acts as the Layer 3 boundary. Therefore, traffic is already being moved to that tier for that purpose. The following figure provides a detailed view of the existing traffic pattern that will need to be integrated.

Cisco ACI provides three methods of integrating with existing network infrastructure:

1. Cisco ACI Fabric as an Additional Data Center Pod

2. Cisco ACI Fabric as a Data Center Policy Engine

3. Cisco ACI Fabric Extended to Non-Directly Attached Virtual and Physical Leaf Switches

Method-1: Cisco ACI Fabric as an Additional Data Center Pod

This method uses a new pod build-out to insert the ACI fabric. In this method, existing servers and services are not modified. ACI is inserted as an aggregation tier for a new pod build-out. This acts the same as attaching a new aggregation tier to an existing core for the purpose of a new pod. The following figure shows the traditional insertion of a new pod.

Traditional Method of Adding a New Data Center Pod

The traditional method to add a pod is to attach a pair of new Aggregation switches to the existing core. New access switches are then connected to this aggregation tier to support new server racks. This method allows for the addition of new servers with the additional stability of separating out the aggregation layer services between pods.

Using this same methodology a new pod can be added to the existing network using an ACI Fabric. Rather than attaching two Aggregation switches and several Access switches a small ACI Spine/Leaf Fabric can be added as shown in the following figure.

Adding a New Data Center Pod Using Cisco ACI

This methodology works in a very similar fashion to the traditional pod addition. The key difference is that with a Spine/Leaf topology everything connects to the Leaf switches; thus the existing Core is shown connected to the ACI Leaf switches, and not to the Spine.

With the physical topology in place, the logical topology will need to be built. Cisco ACI is designed to integrate seamlessly with existing network infrastructure using standard protocols. Connections to outside networks are supported using OSPF, BGP, VXLAN and VLANs. The connection from the ACI leaf switches to the Core switches can be made using any of these, as shown in the following figure:

Using Cisco ACI as a new data center pod provides investment protection for existing infrastructure while allowing growth into the benefits of the ACI Fabric. This methodology requires no topology, connectivity, or policy changes for existing workloads while providing a platform for ACI for new applications and services.

Next Up: Understanding the Switch Fabric Architecture….

Courtesy of Cisco Live 2014

…and ACI and OpenStack.

Courtesy of Cisco Live 2014

…and a design example with Ubuntu KVM, ACI and….

…APIC.

Tabloid Time: The Aliens Are a'Landing ?!.. ;-)

Fireball across Austin, Texas (16th Feb 2009). According to the BBC, apparently, it's NOT debris from a recent satellite collision...: http://news.bbc.co.uk/1/hi/world/7891912.stm

http://us.cnn.com/2009/US/02/15/texas.sky.debris/index.html

Same in Idaho in recent times. NO meteor remains found yet: http://news.bbc.co.uk/1/hi/sci/tech/7744585.stm

Exactly same in Sweden: http://news.bbc.co.uk/1/hi/world/europe/7836656.stm?lss

This was recorded on 25th Feb 2007 in Dakota, US:

http://www.youtube.com/watch?v=cVEsL584kGw&feature=related

This year has seen three of the spookiest UFO videos surface, with people in India, Mexico and even in space, NASA, spotting things they couldn't explain: http://www.youtube.com/watch?v=7WYRyuL4Z5I&feature=related

CHECK out this one on 24th January, 2009 in Argentina close to Buenos Aires:

You tube: www.youtube.com/

Press: Press Coverage

AND Lastly, and more importantly, from Buzz Aldrin on Apollo 11 : http://www.youtube.com/watch?v=XlkV1ybBnHI

Heh?! Don't know how authentic this news is... don't even know if these are UFOs or meteors or ball lightning or something else. But, if meteors, then where are the meteorites? However, I see no reason why life cannot exist on other planets and why they could not be sneaking around here :-) . I, for one, have long suspected some of my relations to be space aliens or at least X-people from the X-Files :-)

I am waiting for a job on an Alien spaceship myself. :-)

Giraffes in Parallel Universe

At Lake Manyara